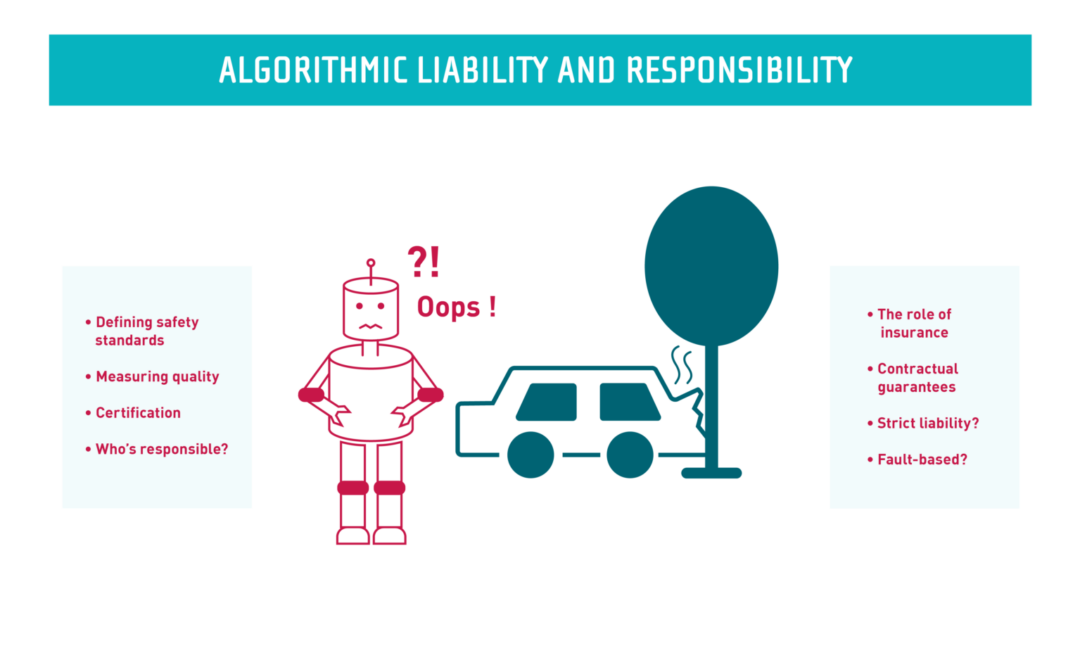

i.e. who should pay for damages caused by an AI system, and prospective responsibility, i.e. how to ensure that designers, operators and users of AI systems have incentives to minimize harm to others in the future.

Research in this area began in the first quarter of 2020.

News and events related to this research axis will be published on this page as they become available.

If you are interested in participating in the research work of this axis, please contact David Bounie or Winston Maxwell.

Our research and teaching focus on the most pressing problems raised by high-risk AI use cases, regardless of the kind of technology deployed (GenAI, deep learning, symbolic AI).