“Everyone would like the algorithms to be explainable, especially in the most critical areas such as health or air transport. There is a real consensus in this area.”

Winston Maxwell, director, law and technology studies at Télécom Paris

Devising new methods to assess the reliability of a model’s predictions, including the ability to abstain from making a decision, and matching reliability tools with relevant legal and ethical requirements.

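The ability to abstain can be illustrated with a minimal sketch, assuming a classifier that outputs class probabilities. The function name `predict_or_abstain` and the `threshold` value are hypothetical choices for illustration, not a method described here; a simple confidence threshold is only one of several possible abstention rules.

```python
from typing import Optional, Sequence


def predict_or_abstain(
    probabilities: Sequence[float], threshold: float = 0.8
) -> Optional[int]:
    """Return the index of the most probable class, or None to abstain.

    `threshold` is an assumed confidence cut-off; in practice it would be
    tuned to the legal and ethical requirements of the use case.
    """
    if not probabilities:
        raise ValueError("probabilities must not be empty")
    best_class = max(range(len(probabilities)), key=lambda i: probabilities[i])
    if probabilities[best_class] < threshold:
        return None  # abstain, e.g. hand the case over to a human reviewer
    return best_class


confident = predict_or_abstain([0.05, 0.90, 0.05])   # confident enough to decide
uncertain = predict_or_abstain([0.40, 0.35, 0.25])   # too uncertain, abstains
```

Raising the threshold makes the system abstain more often, which trades coverage for reliability on the decisions it does make.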

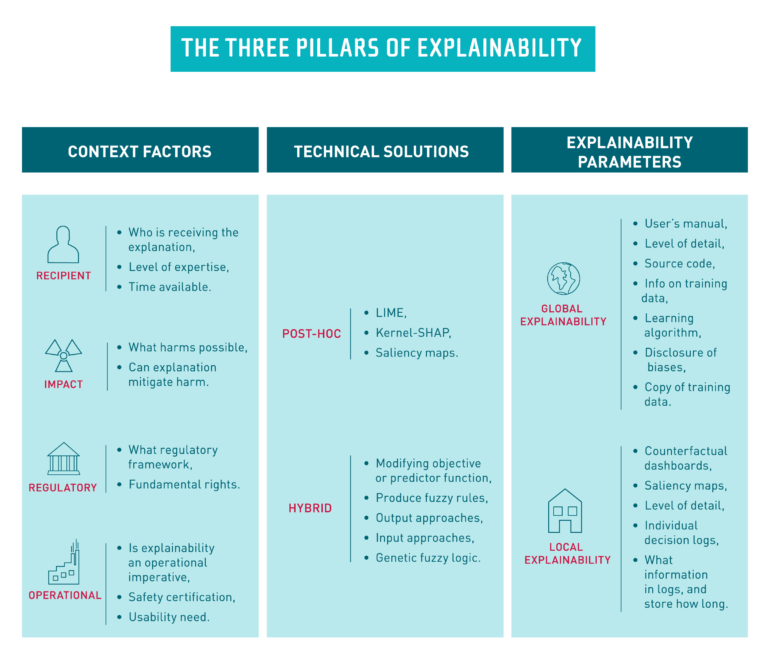

This diagram presents a framework for defining the “right” level of explainability based on technical, legal and economic considerations.

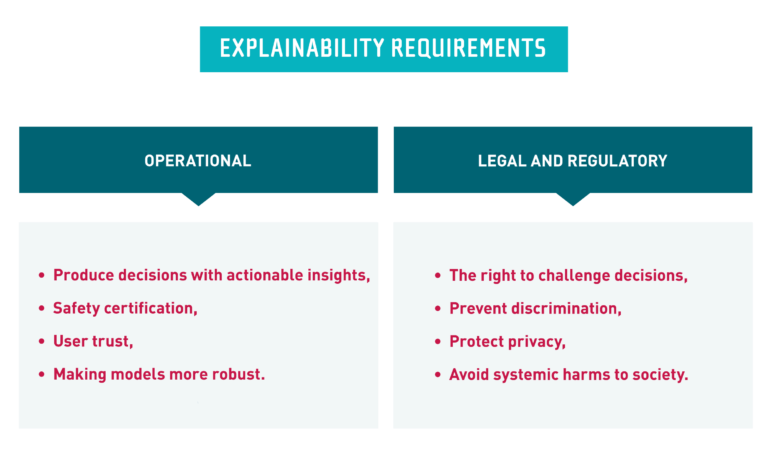

There are operational reasons for explainability, including the need to make algorithms more robust.

There are also ethical and legal reasons for explainability, including protection of individual rights.

It’s important to keep these two sets of reasons separate.

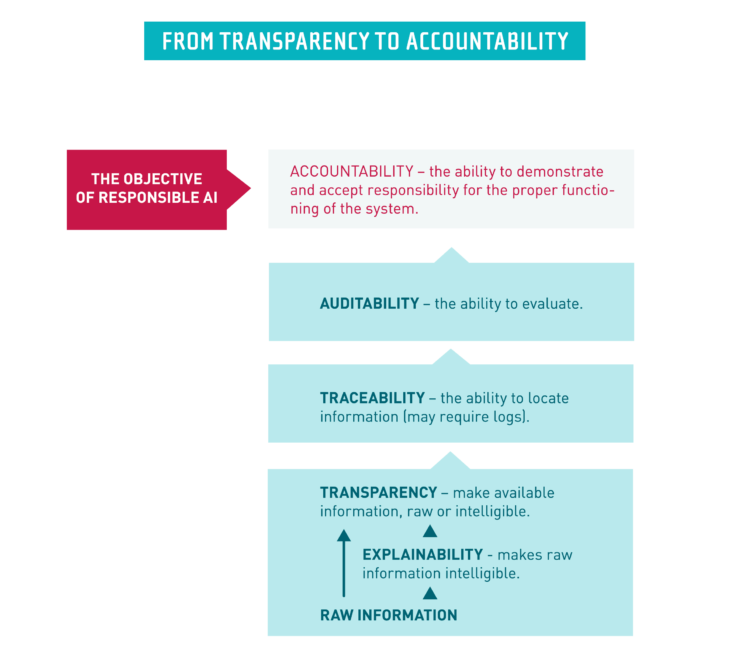

Explainability takes raw information and makes it understandable to humans.

Explainability is a value-added component of transparency.

Both explainability and transparency enable other important functions, such as traceability, auditability, and accountability.

Global explanations give an overview of the whole algorithm.

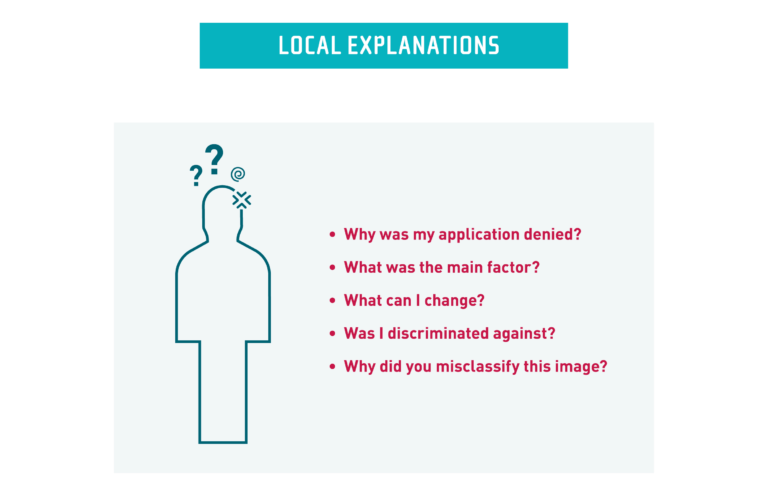

Local explanations provide precise information on a given algorithmic decision.
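The difference between local and global explanations can be sketched in a few lines, under the assumption that the model can be queried as a function. The toy model, the all-zero baseline, and the occlusion-style attribution below are illustrative assumptions, not a technique prescribed here.

```python
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def toy_model(x: Sequence[float]) -> float:
    # Hypothetical stand-in for an opaque model: the third feature is ignored.
    return 2.0 * x[0] - 1.0 * x[1] + 0.0 * x[2]


def local_explanation(
    model: Model, x: Sequence[float], baseline: Sequence[float]
) -> List[float]:
    """Explain ONE decision: how much the output changes when each feature
    of this particular input is replaced by its baseline value."""
    reference = model(x)
    effects = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] = baseline[i]
        effects.append(reference - model(perturbed))
    return effects


def global_explanation(
    model: Model, dataset: Sequence[Sequence[float]], baseline: Sequence[float]
) -> List[float]:
    """Summarise the WHOLE model: mean absolute local effect per feature."""
    totals = [0.0] * len(baseline)
    for x in dataset:
        for i, effect in enumerate(local_explanation(model, x, baseline)):
            totals[i] += abs(effect)
    return [total / len(dataset) for total in totals]


baseline = [0.0, 0.0, 0.0]
one_decision = local_explanation(toy_model, [1.0, 2.0, 3.0], baseline)
overview = global_explanation(toy_model, [[1.0, 2.0, 3.0], [3.0, 0.0, 1.0]], baseline)
```

Here `one_decision` describes a single input, while `overview` aggregates over a dataset and shows that the third feature never influences the output.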

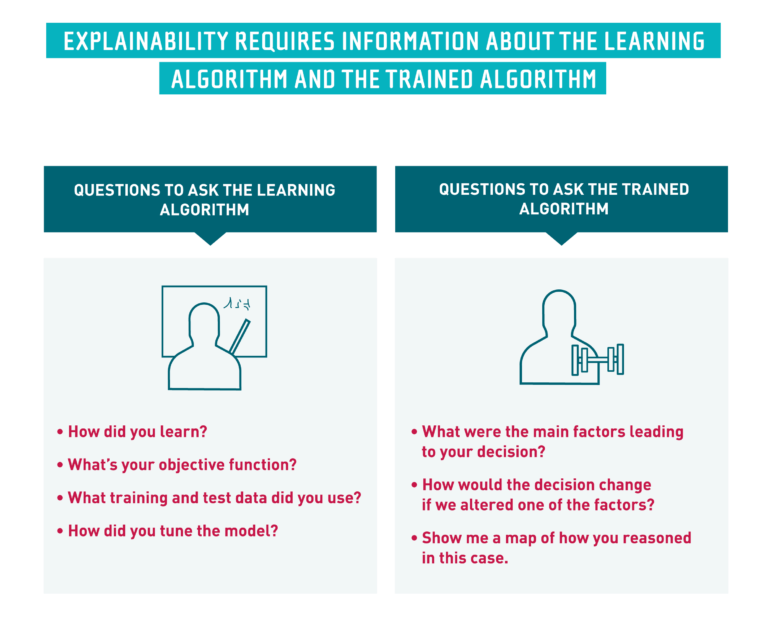

Explanations may be needed regarding the learning algorithm, including information on the training data used.

Explanations relating to the learned algorithm will generally focus on a particular algorithmic decision (local explanations).

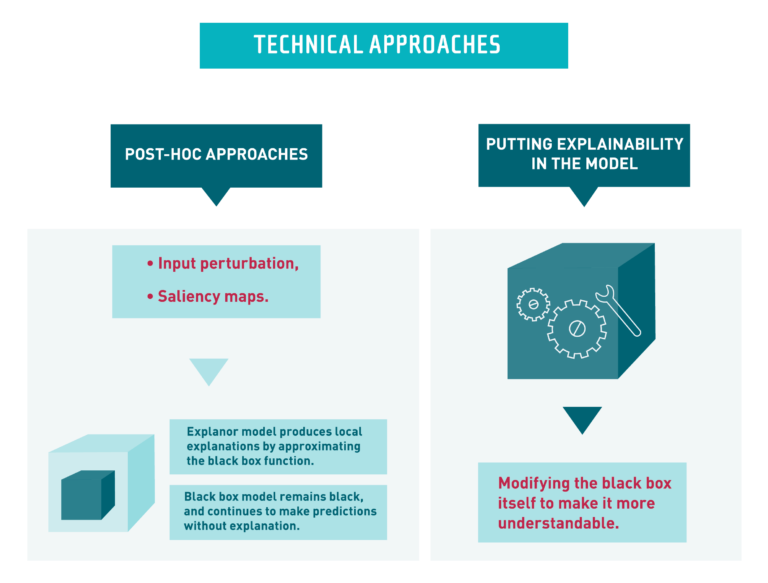

Post-hoc approaches try to imitate the functioning of the black-box model.

Hybrid approaches try to build explainability into the model itself.
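The post-hoc idea of imitating a black box can be sketched as fitting a simple, readable surrogate to the black box's own outputs. Everything below is an assumption for illustration: `black_box` is a hypothetical opaque model, and the surrogate is a one-threshold rule chosen for readability.

```python
from typing import List, Sequence, Tuple


def black_box(x: float) -> int:
    # Hypothetical opaque model whose internals we pretend not to know.
    return 1 if (x * x + 0.5 * x) > 6.0 else 0


def fit_stump(xs: Sequence[float], labels: Sequence[int]) -> Tuple[float, float]:
    """Find the rule 'predict 1 if x > threshold' that best reproduces the
    black-box labels. Returns (threshold, fidelity), where fidelity is the
    share of inputs on which the surrogate agrees with the black box."""
    best_threshold, best_fidelity = xs[0], 0.0
    for candidate in sorted(set(xs)):
        agreements = sum(
            1 for x, y in zip(xs, labels) if (1 if x > candidate else 0) == y
        )
        fidelity = agreements / len(xs)
        if fidelity > best_fidelity:
            best_threshold, best_fidelity = candidate, fidelity
    return best_threshold, best_fidelity


# Query the black box on sample inputs, then fit the surrogate to its answers.
xs: List[float] = [i * 0.5 for i in range(11)]  # 0.0, 0.5, ..., 5.0
labels = [black_box(x) for x in xs]
threshold, fidelity = fit_stump(xs, labels)
```

Fidelity measures agreement with the black box on the sampled inputs, not accuracy against ground truth, so a surrogate can be faithful to a model that is itself wrong.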

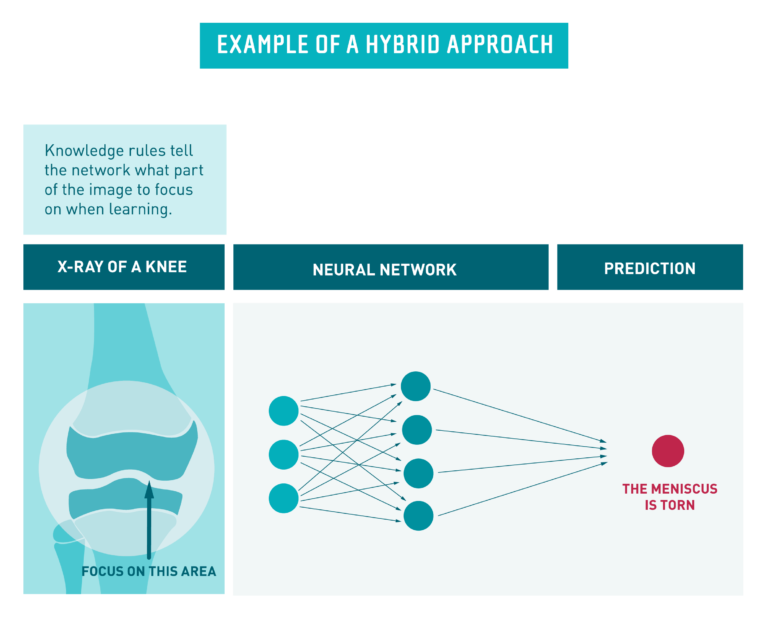

A hybrid approach may teach the algorithm to look at the right area of the image, based on domain expertise, in this case radiology.

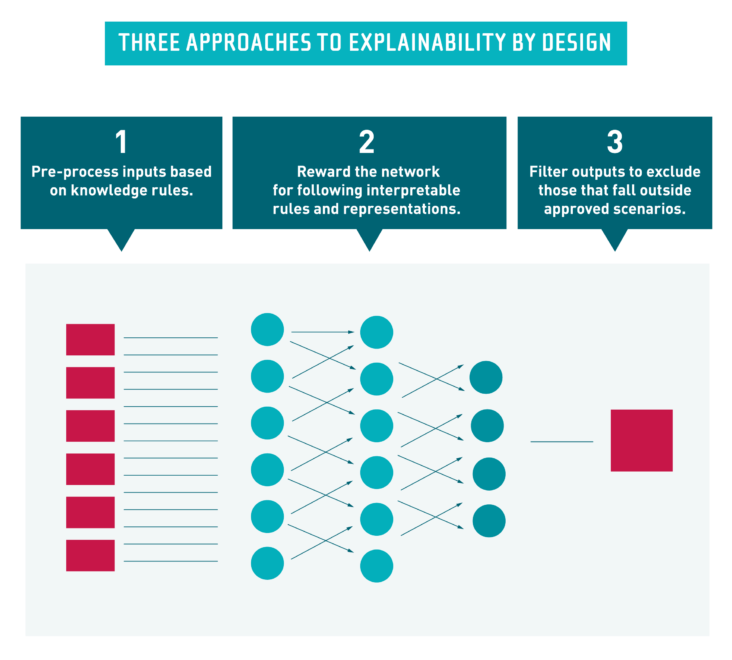

Hybrid AI approaches can focus on the inputs, on the network itself, or on the outputs.

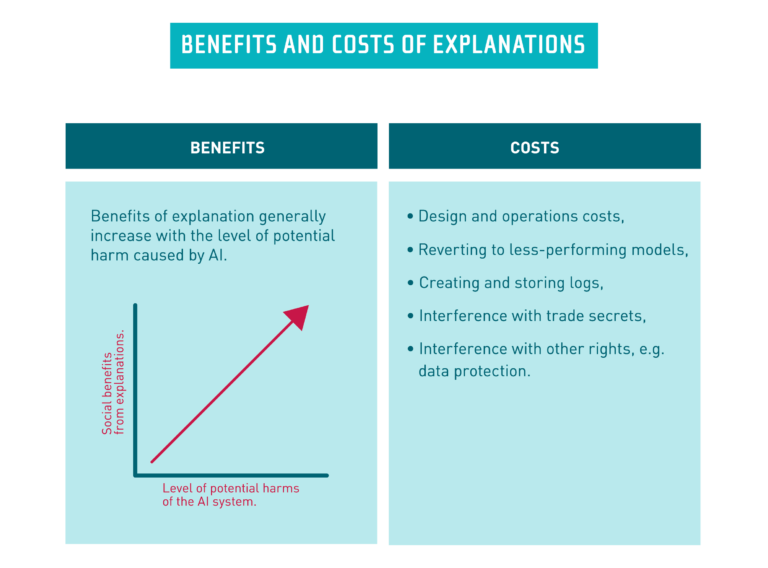

Finding the right level of explainability requires weighing the benefits and costs of an explanation in a given situation. For an explanation to be socially useful, its total benefits should exceed its total costs.

Our research and teaching revolve around the most pressing problems facing high-risk AI use cases, regardless of the kind of technology deployed (GenAI, deep learning, symbolic AI).