Operational AI Ethics research is organised around both publicly and privately funded research chairs. This page shows highlights.

Through publications of our professors and PhD students, explore the social, ethical and legal issues associated with AI

Our research is made possible thanks to collaboration with private and public sector actors

AI and society

Tiphaine Viard, Associate Professor

I am particularly interested in mixed-methods approaches, in reducing the (perceived) schism between quantitative and qualitative methods, and in action research methods.

Thomas Le Goff, Associate Professor

The “AI and Environment” research axis investigates the impact of AI technologies on the environment, examining how AI systems can be strategically employed to enhance environmental preservation efforts, optimize resource management, and contribute to sustainable development. The pressing environmental issues of our time require innovative, efficient, and scalable solutions. AI presents a unique set of tools and methodologies that can be used to tackle complex environmental problems. By leveraging machine learning, data analytics, and predictive modelling, we can contribute to protecting biodiversity and ecosystems, reducing humans’ impact on the environment, and building robust strategies for mitigating the impact of climate change.

For example, AI is used in the energy sector to optimize the production of low-carbon electricity (see Metroscope’s predictive maintenance software for power plants or data centres), to better predict renewable energy production, or to increase energy efficiency (see Enerbrain).

Simultaneously, we need to analyze the potential environmental footprint of AI technologies, addressing challenges associated with energy consumption and waste generation so that AI systems do not become an ecological problem in their own right. Recently, Hugging Face published a paper, co-written with researcher Emma Strubell, which highlights once again the considerable environmental cost of new generative AI models, given the amount of energy these systems require and the amount of carbon they emit.

In our rapidly evolving world, the intersection of AI and environmental sustainability stands as a promising frontier for transformative research.

Research Priorities:

Vinuesa R., Azizpour H., Leite I. et al. (2020). The role of artificial intelligence in achieving the Sustainable Development Goals, Nature Communications, 11, 233.

Melanie Gornet, PhD Student

In her research, PhD student Melanie Gornet focuses on the regulation of artificial intelligence, encompassing social, legal and technical aspects. Notably, she has been studying the relationship between technical standards, the CE marking that will be required for high-risk AI systems under the AI Act, and respect for fundamental rights. Her papers and conference presentations look at the multiple standardization initiatives around “trustworthy AI”, including standards that attempt to address bias and non-discrimination. She asks whether it is ever possible to certify that an AI system complies with fundamental rights. The challenge stems from the connection between fundamental rights and the specifics and context of each case. Only a judge can decide, for example, whether a certain level of residual bias in a facial recognition system is acceptable, or whether, on the contrary, it creates illegal discrimination. While technical standards cannot set the exact level of tolerance with regard to fundamental rights, they can play a role in establishing common terminology or acting as a toolbox by defining design methods and means of measurement. Melanie Gornet’s other projects involve the study of AI ethics charters and manifestos, fairness in facial recognition systems, metrics of explainability, and AI audit methodology.

[paper][poster] Gornet, M., Viard, T., Mapping AI Ethics: A Quantitative Analysis of the Plurality, and Lack Thereof, of Discourses, 2023.

The European AI Act takes a product safety approach, relying heavily on technical standards. My work explores the intersection between technical standards and AI principles such as fairness. Many AI systems will require trade-offs, for example between fairness and performance, or between individual fairness and group fairness. Technical standards are useful, but may be of little help in defining what is an ‘acceptable’ trade-off.

Astrid Bertrand, PhD Student

Joshua Brand, PhD Student

Philosophy and explainable AI?

Why do we need moral philosophy in our research on Explainable AI (XAI)? XAI is widely accepted as a requirement for trustworthy AI systems, for example when deploying AI in the financial sector for anti-money laundering efforts. To do any technical, policy, or legal work, however, we often simply accept the need for explainability and start from this assumption to determine its technical limitations and how best to proceed with its development and implementation. Yet, among all this excellent work discussing new technologies, legal clarifications, and implementation insights, we eventually need to answer the simple, yet foundational, normative question: Why ought we to do this? Why do we need XAI? Before we implement and discuss the practical uses of XAI techniques, we need to provide robust justification for their use beyond merely appealing to some document or an arbitrary reason that “someone said so”.

Answering this question through the critical lens of moral philosophy is important because XAI methods are not easy to implement. They can provide beneficial explanations that help support accountability and auditing measures, yet they are less efficient than, and contrast with, the fast, relatively autonomous, “black-box” machine learning models. Robust justification is necessary to show those who implement AI that, even though XAI may slow down their decision-making processes, it is morally right, or necessary, to implement it.

This is something I recently tackled in my paper on XAI and public financial institutions (see link below), where I argued that employing only XAI is embedded in the identity of public financial institutions: for these banks, explainability is not a mere preference but essential to their identity.

With this justificatory work that grounds and clarifies the use of explainability, we better understand its limitations and where and how its development and implementation should be directed.

If we look into the philosophical foundations of AI ethics, we see deep disagreements about the nature and future of humanity, science, and modernity. Questioning AI opens up an abyss of critical questions about human knowledge, human society, and the nature of human morality.

…philosophy is not a subject. It’s a discipline, designed to address the various forms of philosophical perplexity to which any reflective human being is subject.

Explaining decisions shows respect for the recipient’s humanity and vulnerability. Where AI supports human decision-making, the human decision-maker must be able to explain and justify her decision to the person affected. Explanations are a key characteristic of human-to-human interactions, and AI systems must ensure that this human characteristic is preserved, even where black box algorithms are used.

The Intelligent Cybersecurity for Mobility Systems (ICMS) chair will focus on how on-board vehicle systems can resist cyber attacks with the help of AI.

Winston Maxwell and Thomas Le Goff of the Operational AI Ethics team will be leading the research theme devoted to regulatory, data protection and data sharing aspects.

Winston Maxwell, Professor of law

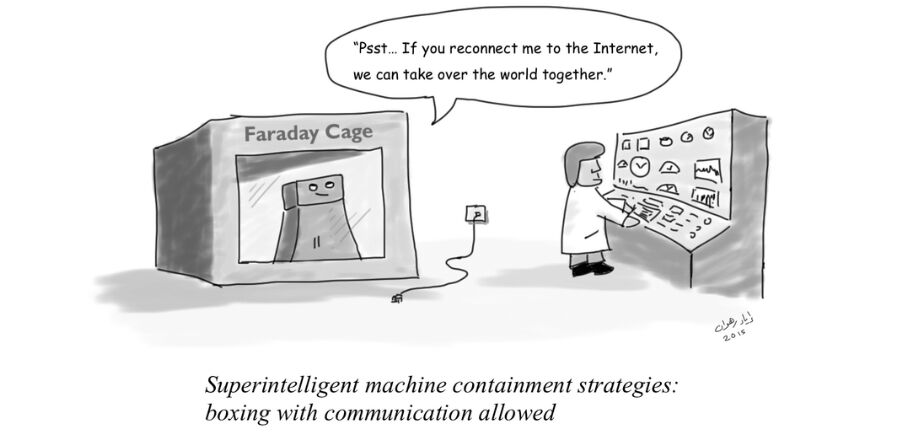

Human control over AI systems. How do we ensure that human control over AI systems is effective? Human control means different things in different contexts. Human-in-the-loop, human-on-the-loop and human-out-of-the-loop are some of the terms used to designate different degrees of human control. Human control is one of the requirements of the AI Act for high-risk systems. The European Court of Justice has also required human control for algorithmic systems that can have important impacts on fundamental rights. For medical diagnosis, effective control by the doctor is essential. International Humanitarian Law applicable to armed conflict requires human control over lethal weapon systems. We study human control in operational contexts, attempting to gauge the effectiveness of human control in light of different operational constraints. Explainability is of course one of the enablers of effective human control. However, humans are not always good decision-makers, and sometimes human control can do more harm than good. Yet even if the human doesn’t always increase the objective quality of the final decision, having a human decision-maker is sometimes essential for human dignity and for what Professor Robert Summers calls “process values”.

Our research and teaching gravitate around the most pressing problems facing high-risk AI use cases, regardless of the kind of technology deployed (GenAI, deep learning, symbolic AI)
