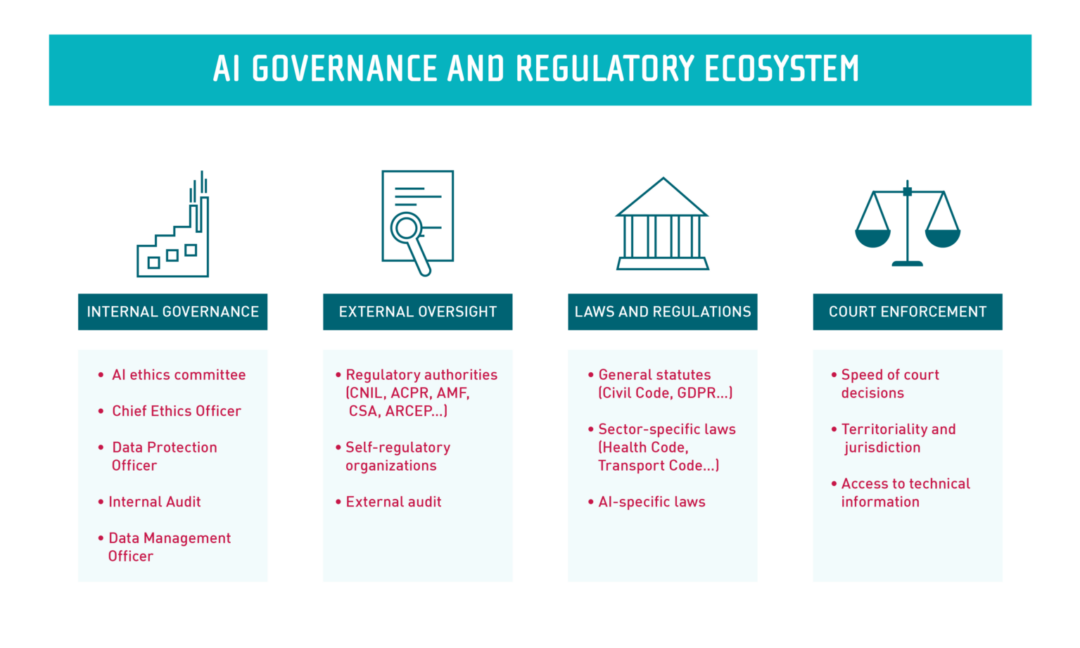

The question of AI governance and regulation centers on the regulatory ecosystem for AI: for example, which tools in the regulatory toolbox strike the best trade-off between AI innovation and safety.

Too much reliance on self-regulation leads to under-protection of important public values and fundamental rights. There may be tension between sector-specific regulation (e.g. regulation on medical devices) and horizontal AI regulation. There may also be a tendency to adopt new AI-specific rules where existing laws would suffice. Internal governance frameworks within corporations can contribute to AI ethics, but they need to be backed up by effective external enforcement.

These questions will form the core of the governance and regulation module of Operational AI Ethics.

Research in this area began in the first quarter of 2020.

News and events related to this research axis will be published on this page as they become available.

If you are interested in taking part in this axis's research, please contact David Bounie or Winston Maxwell.

Our research and teaching focus on the most pressing problems raised by high-risk AI use cases, regardless of the kind of technology deployed (GenAI, deep learning, symbolic AI).